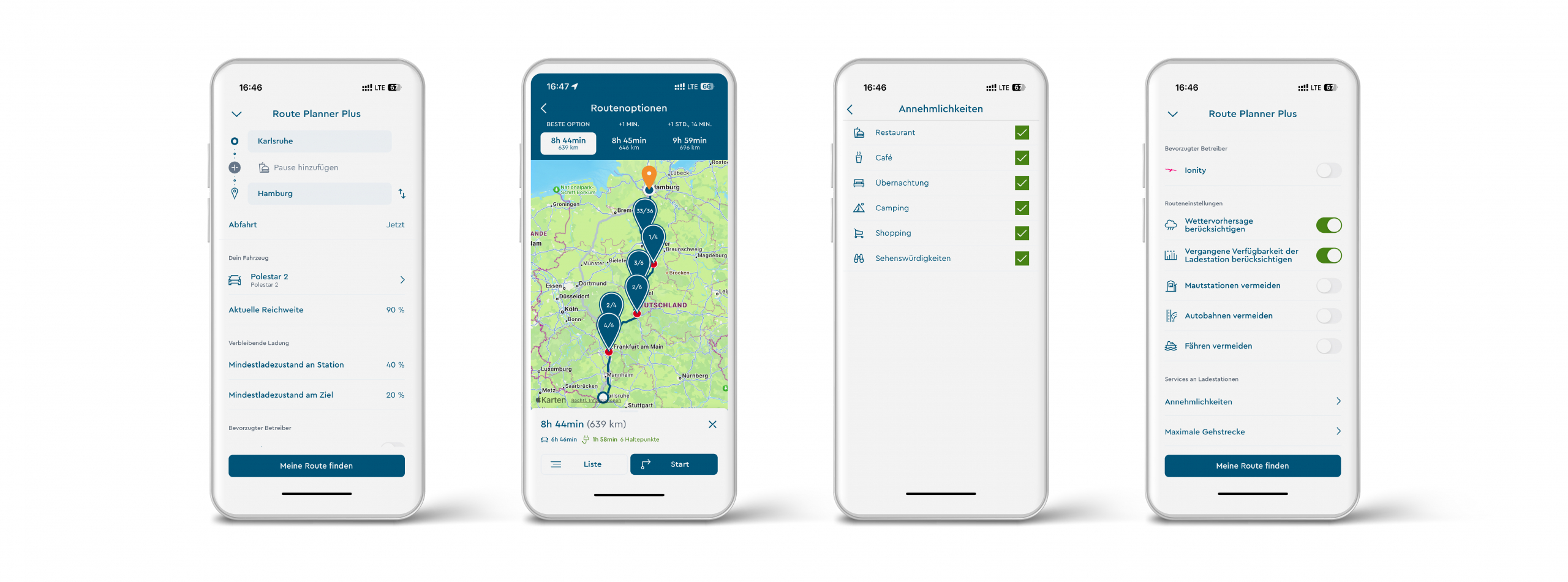

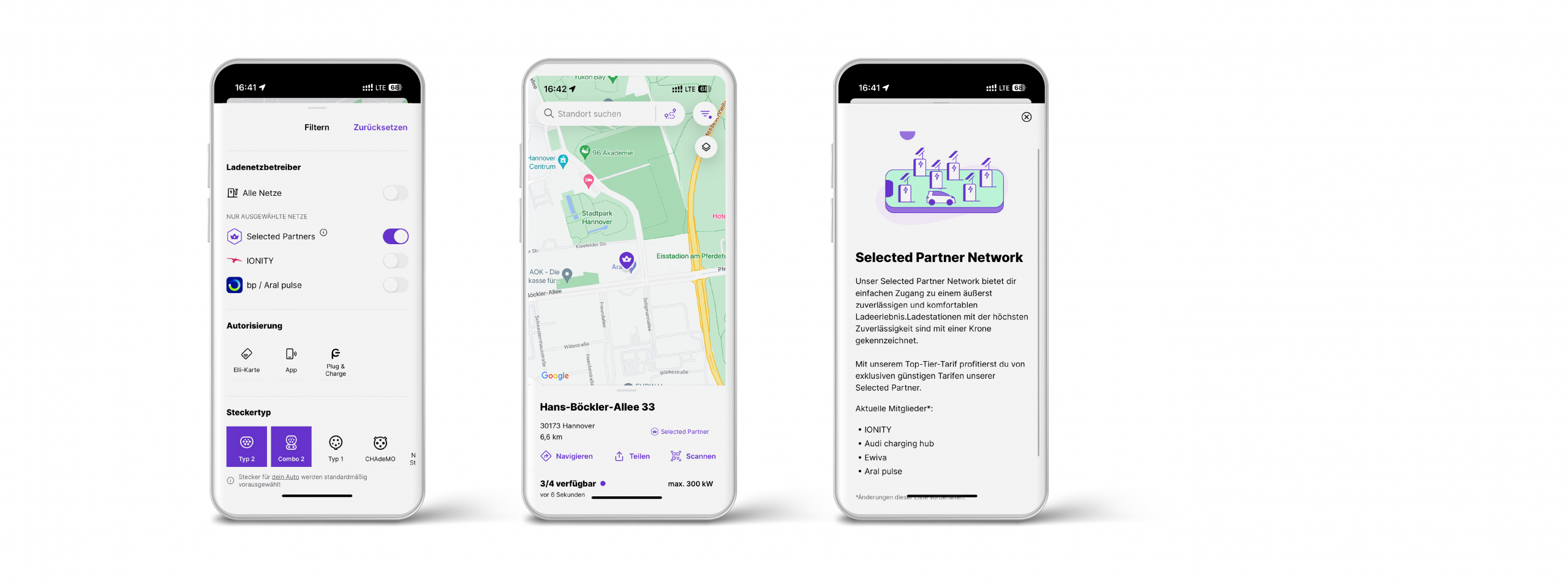

The correlation between the results for the task adequacy criterion and the functional benchmark can be explained by the choice of the main use case. Three apps score over 80 points and allow the use case to be completed appropriately and quickly. Significant deductions were made for apps in the test field that did not allow the use case to be completed, either because the necessary functions were missing or because testers could not find them (e.g., filters or marking a charging point as a favourite).

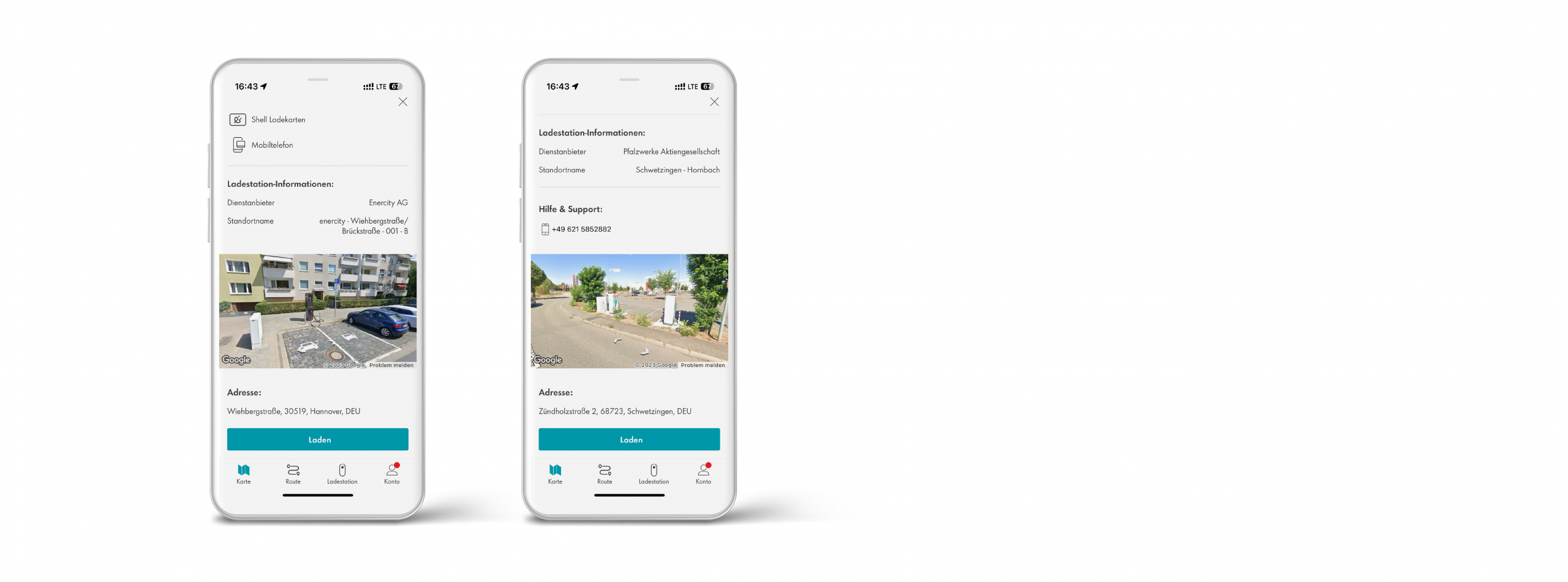

In terms of self-descriptiveness, this year's benchmark found good results, with an average of over 70 points. This indicates that the tested apps provide a good information base. Informative previews were rated positively overall. These are small overviews of the charging station's most important key data that do not cover the entire map section. Our testers made significant deductions for some apps that only show price information at the third or fourth click level, which makes it difficult for users to get a targeted overview of costs.

The results for conformity with user expectations naturally depend heavily on users' previous experience with apps in general. Many of the tested apps follow the patterns users have already learned, which was rated positively. For example, they use a preview to show the most important information about the charging point. Testers also expected the map to remain clear at different zoom levels, which can be achieved by clustering nearby charging stations. In this category, 12 of the tested apps scored over 70 points and thus achieved good results. Maingau Autostrom and Shell Recharge even achieved very good results in this dimension, with 87.5 points each.

Scores in the learnability section of the test ranged from 61 to 85 points. For the most part, testers found the apps easy to learn, although they noticed some limitations caused by complicated filter logic.

In the controllability dimension, seven applications achieved very good results of over 80 points.

Under this interaction principle, testers particularly welcomed the option, offered by some apps, to switch between a list view and a map view when searching for charging stations.

Robustness against user errors is tested, among other things, by entering slightly misspelled places and points of interest. The apps differ here as well: some quickly offer correct suggestions, while others apply no error correction, so the search fails. These outcomes are included in the UX evaluation. Points were also deducted for colour schemes that can provoke user errors (e.g., when there is no indication of whether a charging station is available). Overall, the results in the "Robustness against user errors" dimension vary widely, ranging from 40 to 87 points.

The final dimension, user engagement, is assessed primarily on the basis of an inviting, appealing design and on whether users are transparently offered a way to submit suggestions for improvement. The applications differ considerably in how they handle user feedback. In some cases, feedback functions are hidden or only available via a general contact option. In other MSP applications, the option to give feedback is directly visible and available via several communication channels (e.g., WhatsApp, chat, email). In this area, nine apps scored over 80 points, achieving very good results.